We have identified an issue with the way lancache handles the CACHE_MEM_SIZE/CACHE_INDEX_SIZE environment variables. If you are running a cache with a CACHE_DISK_SIZE of more than 2TB, we suggest you immediately do the following:

- Check the value of CACHE_MEM_SIZE/CACHE_INDEX_SIZE in your .env. We now recommend ~250m per 1TB of cache space.

- Update lancache (run ./update-containers.sh if you are using the compose setup).
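
As a rough sketch of the sizing rule in the first step, the following Python snippet derives a suggested CACHE_INDEX_SIZE from a CACHE_DISK_SIZE value. The helper names `parse_size_mb` and `recommended_index_size` are hypothetical and not part of lancache, and the snippet assumes sizes are written with an `m` or `g` suffix and uses decimal units (1TB = 1,000,000MB) for simplicity.

```python
import re

# Rule of thumb from this post: ~250m of index per 1TB of cache disk.
INDEX_MB_PER_TB = 250
MB_PER_TB = 1_000_000  # decimal units, an assumption made for simplicity

# Multipliers to megabytes for the suffixes this sketch accepts.
_UNIT_TO_MB = {"m": 1, "g": 1000}


def parse_size_mb(value: str) -> int:
    """Parse a size such as '500m' or '4000g' into whole megabytes."""
    match = re.fullmatch(r"\s*(\d+)\s*([mg])\s*", value.lower())
    if match is None:
        raise ValueError(f"unrecognised size: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNIT_TO_MB[unit]


def recommended_index_size(cache_disk_size: str) -> str:
    """Return a suggested CACHE_INDEX_SIZE (e.g. '1000m') for a disk size."""
    disk_mb = parse_size_mb(cache_disk_size)
    # Integer ceiling division, so partial terabytes round the index up.
    index_mb = -(-disk_mb * INDEX_MB_PER_TB // MB_PER_TB)
    return f"{index_mb}m"


if __name__ == "__main__":
    for disk in ("2000g", "4000g", "6500g"):
        print(f"CACHE_DISK_SIZE={disk} -> CACHE_INDEX_SIZE={recommended_index_size(disk)}")
```

Treat the result as a starting point; the recommendation itself is approximate.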

More Detail:

Over the last 24 hours we have been diagnosing an issue where some caches were not filling their allocated CACHE_DISK_SIZE and started purging data after ~2.5TB. This led to the discovery of two issues in our documentation and regression handling.

Issue 1: CACHE_MEM_SIZE vs CACHE_INDEX_SIZE

In October we renamed the slightly confusing environment variable CACHE_MEM_SIZE to the more accurate CACHE_INDEX_SIZE. To prevent this from breaking auto-update scripts, we have a deprecation check which is supposed to copy the value of CACHE_MEM_SIZE into CACHE_INDEX_SIZE on boot. Unfortunately, in one of the two places where this check is performed, the script was erroring, so every cache using the legacy CACHE_MEM_SIZE had its value overwritten with the default CACHE_INDEX_SIZE of 500m.
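
The intended behaviour of the deprecation check can be sketched in Python as follows. This is only an illustration of the fallback logic described above, not the actual lancache boot script: the function name `resolve_index_size` is hypothetical, and the choice to let a set CACHE_MEM_SIZE take precedence when both variables are present is an assumption based on the "copy on boot" description.

```python
import os
from typing import Mapping

DEFAULT_INDEX_SIZE = "500m"  # default CACHE_INDEX_SIZE mentioned in this post


def resolve_index_size(env: Mapping[str, str]) -> str:
    """Pick the effective index size from a set of environment variables."""
    legacy = env.get("CACHE_MEM_SIZE")
    current = env.get("CACHE_INDEX_SIZE")
    if legacy:
        # Deprecated name still in use: copy its value across
        # (assumed to win even if CACHE_INDEX_SIZE is also set).
        return legacy
    if current:
        return current
    # Neither variable is set, so fall back to the default.
    return DEFAULT_INDEX_SIZE


if __name__ == "__main__":
    print("effective index size:", resolve_index_size(os.environ))
```

The bug described above is equivalent to the first branch failing, so that a legacy value fell through to the default.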

This issue has now been resolved, and we are looking at how to improve the deprecation handling and avoid these duplication errors in the future.

Issue 2: How much CACHE_INDEX_SIZE do you actually need?

CACHE_INDEX_SIZE is the amount of memory allocated to nginx to store the index of cache data. Our original recommendation in the documentation was 1000m, which is enough to index 8 million files (or 8TB of cache data). At some point we reduced this recommendation to 500m, which we felt should still have been sufficient for an average 4TB cache.

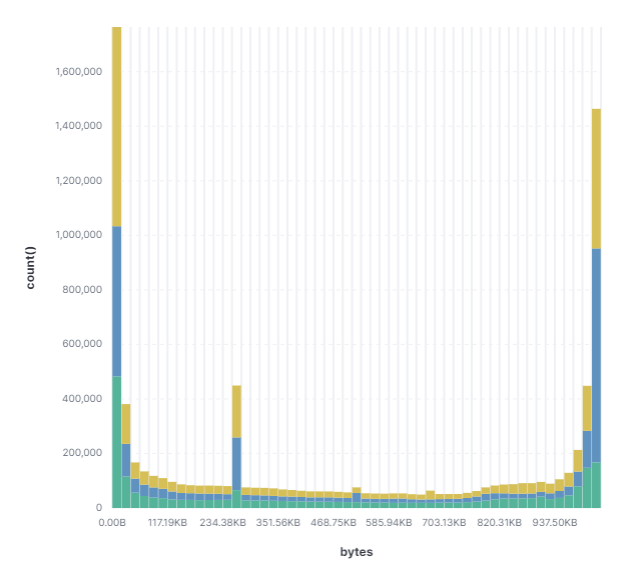

This recommendation was based on the assumption that the cache is full of 1MB slices. What we realised while investigating why caches were not filling is that the distribution of file sizes within the cache is actually a reverse bell curve, with far more files under 1KB than expected, in addition to the expected large numbers of 1MB slices.

Based on analysis of real-world usage across all CDNs, we have now adjusted the recommendation to 250m per 1TB of cache data, which allows 2 million files (at an average file size of 500KB). This should cover the variance with some headroom to spare.
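
The arithmetic behind both the old and the new recommendation can be checked with a short Python sketch. It assumes roughly 8,000 index entries per megabyte, which is the ratio implied by the 8-million-files figure above and matches the nginx documentation for cache key zones. The function names are illustrative only, and sizes use decimal units.

```python
KEYS_PER_MB = 8_000      # approximate index entries per MB of key zone
KB_PER_TB = 1_000_000_000  # decimal units


def max_files(index_mb: int) -> int:
    """Approximate number of cached files an index of this size can track."""
    return index_mb * KEYS_PER_MB


def indexable_tb(index_mb: int, avg_file_kb: float) -> float:
    """Approximate cache data (in TB) covered at a given average file size."""
    return max_files(index_mb) * avg_file_kb / KB_PER_TB


if __name__ == "__main__":
    # Original assumption: every cached file is a 1MB slice.
    print("1000m @ 1MB avg:  ", max_files(1000), "files,", indexable_tb(1000, 1000), "TB")
    print(" 500m @ 1MB avg:  ", max_files(500), "files,", indexable_tb(500, 1000), "TB")
    # Revised assumption: average file size of 500KB.
    print(" 500m @ 500KB avg:", max_files(500), "files,", indexable_tb(500, 500), "TB")
    print(" 250m @ 500KB avg:", max_files(250), "files,", indexable_tb(250, 500), "TB")
```

Under these assumptions a 500m index covers only about 2TB at a 500KB average, which is in the same range as the ~2.5TB at which affected caches began purging.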